| Home |

| Bio |

| Research |

| Teaching |

| Publications |

| Research

Group |

| Collaborations |

| Talks |

Visual

Information Processing in Multi-Camera Systems

|

|

|

|

|

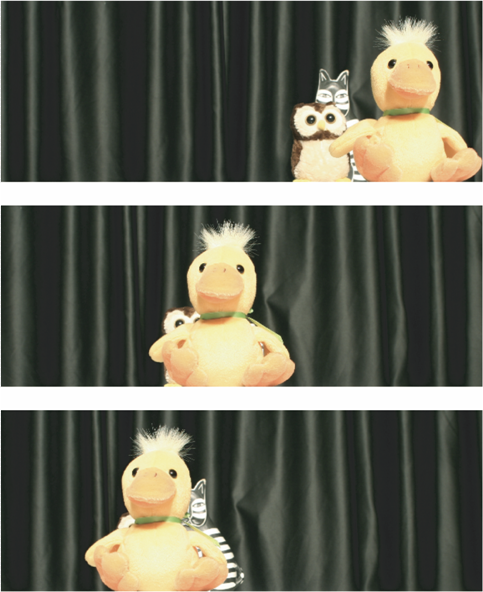

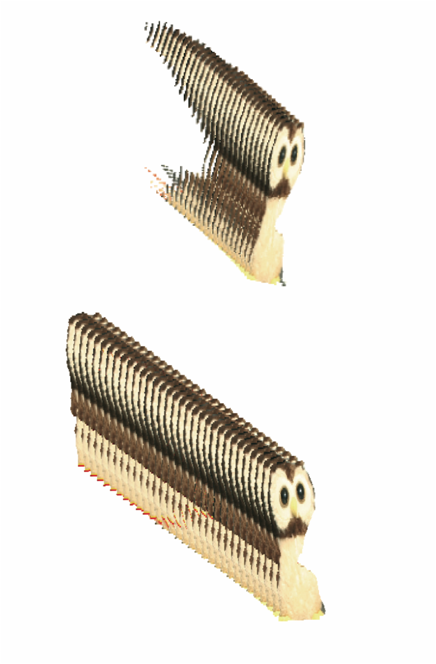

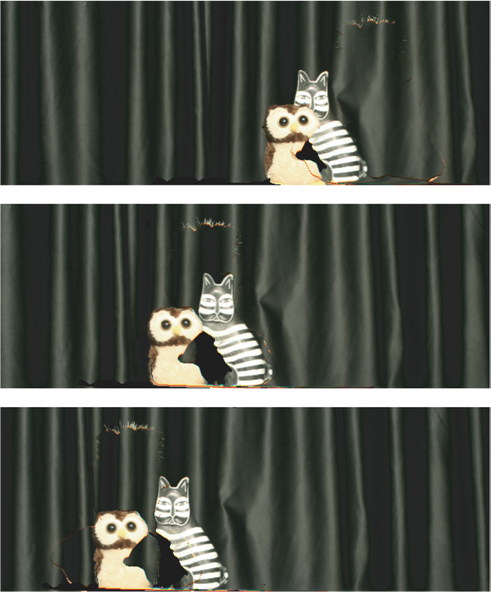

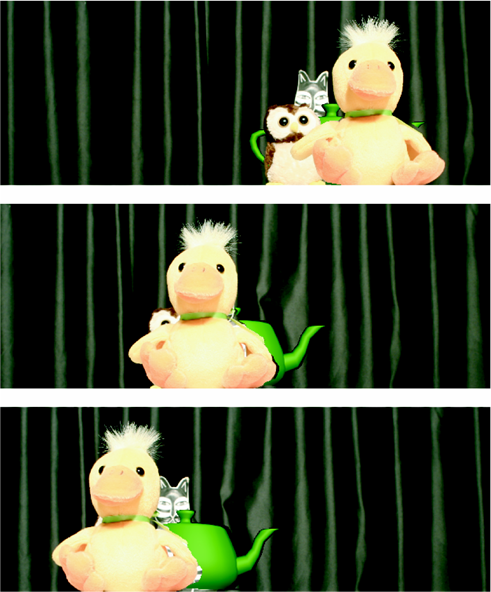

| (a) | (b) | (c) | (d) |

Figure 1(a): Three images of a set of 32 multi-view images. Fig 1(b): An example of the hypervolume extracted and then interpolated using the level-set method. Fig1(c): The duck hypervolume is removed and what is 'behind' is estimated. Fig1.(d): A synthetic object is inserted.

To probe further check out the following videos and Jesse Berent web-page.

Main Publication:

- J. Berent and P.L. Dragotti, Plenoptic Manifolds: Exploiting Structure and Coherence in Multiview Images, IEEE Signal Processing Magazine, vol. 24 (6), pp.34-44, November 2007.

- J. Berent and P.L. Dragotti, Unsupervised Extraction of Coherent Regions for Image Based Rendering, British Machine Vision Conference (BMVC), Warwick, UK, September 2007.

- J. Berent, P. L. Dragotti, "Segmentation of Epipolar-Plane Image Volumes with Occlusion and Disocclusion Competition," in Proceedings of IEEE International Workshop on Multimedia Signal Processing (MMSP'06), Victoria, Canada, October 3-6, 2006.

PhD Students: Jesse Berent and Yizhou (Eagle) Wang.

Collaborations and Interactions: M. Brookes (ICL), M. Vetterli (EPFL).

| Department Home |